Science & Quality

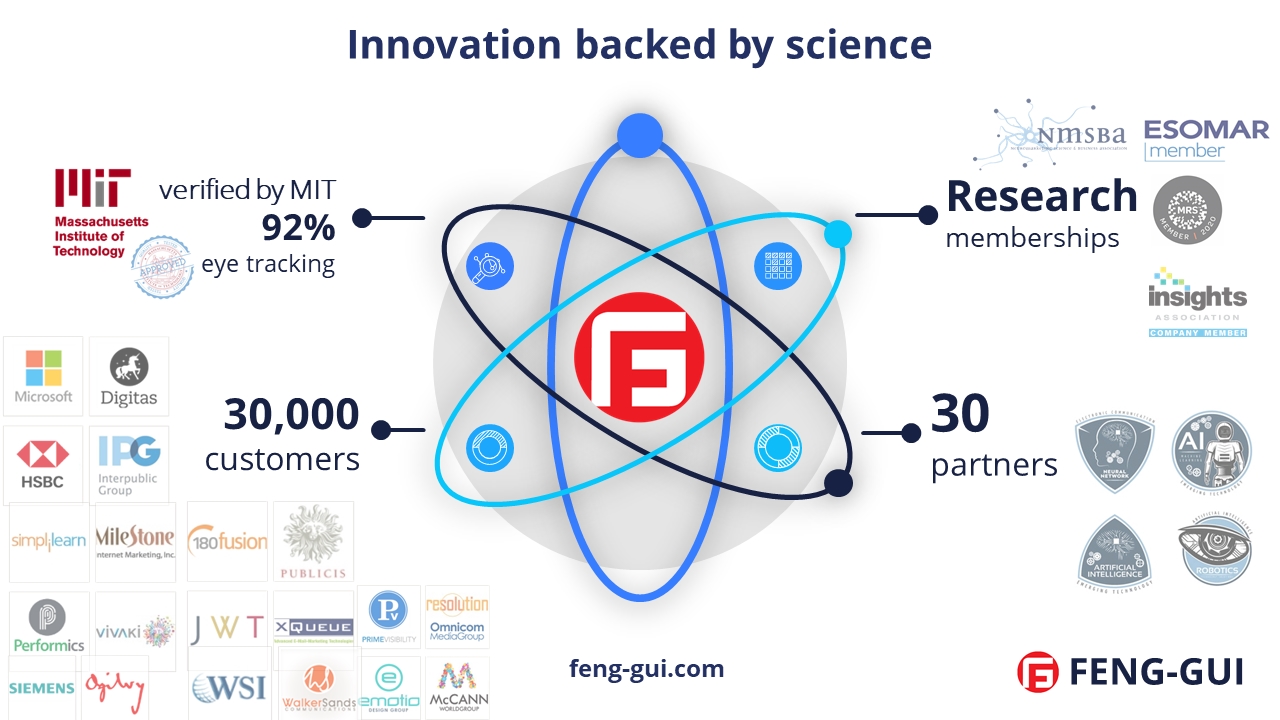

Feng-GUI delivers reports with 92% accuracy compared with a 5-second eye-tracking test of 40 participants. Powered by dozens of neuroscience-driven algorithms, our analysis simulates natural vision, attention, perception, and cognition, turning human science into actionable design insights.

How accurate is it?

Since 2007, our analysis has been trained and refined on billions of data points from tens of thousands of eye tracking experiments.

- Cutting edge: constantly updated with the latest vision science

- Precise: powered by live eye tracking benchmarks

- Accurate: free from test bias and unreliable subjects

Feng-GUI's cutting-edge AI algorithms have been at the forefront of predictive analytics since 2007.

The science behind Feng-GUI

Cognitive science explains how the brain perceives and processes external stimuli. By applying these principles, Feng-GUI reveals how users truly see and respond to your designs. Each report highlights specific aspects of brain activity and shows how cognitive science can be leveraged to communicate more effectively.

- Live eye tracking: real user eye paths across webpages and media

- Neuroscientific research: how the brain processes and prioritizes visual data

- Cognitive science: how human behavior shapes perception of design

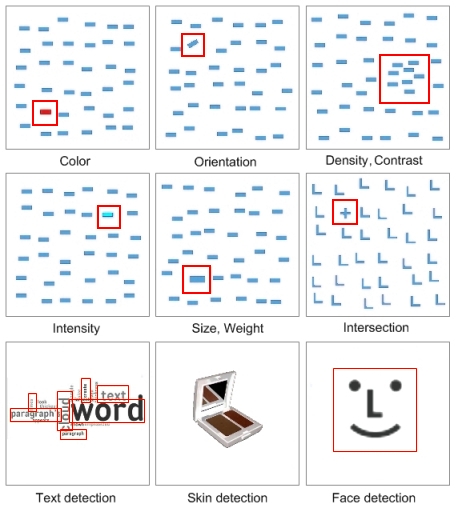

Analysis methodologies include:

- Neurobiological implementation of the brain and eyes

- Feature Integration Theory

- Saliency and computational attention algorithms

- Bottom-up and top-down algorithms

- Face detection

- Multilingual text detection

- Skin detection

- Aesthetics: complexity vs. focus

- Facial expressions and emotions: detection of 8 facial expressions

- Emotion detection: approach vs. withdrawal

- Object detection and recognition, with automatic creation of areas of interest

- Machine learning models for precise predictive analytics

- Generative AI system: an Insights and Recommendations report generated with third-party AI platforms such as OpenAI and Google Gemini
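Several of the methodologies above (Feature Integration Theory, saliency, bottom-up attention) share one core idea: separate feature channels are turned into contrast maps and then merged into a single saliency map. The sketch below illustrates that idea only, loosely in the style of classic center-surround models. The function names, the 3x3 neighborhood, the [0, 1] normalization, and plain averaging are all assumptions for illustration; this is not Feng-GUI's implementation.

```python
"""Toy bottom-up saliency map in the spirit of Feature Integration Theory.

Assumption: a generic center-surround sketch on small grayscale feature
grids (lists of lists). It is NOT Feng-GUI's algorithm.
"""
from typing import List

Grid = List[List[float]]


def center_surround(feature: Grid, radius: int = 1) -> Grid:
    """Contrast of each cell against the mean of its surrounding neighbors."""
    h, w = len(feature), len(feature[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    # Skip the center cell itself and anything outside the grid.
                    if (dy or dx) and 0 <= ny < h and 0 <= nx < w:
                        total += feature[ny][nx]
                        count += 1
            surround = total / count if count else 0.0
            out[y][x] = abs(feature[y][x] - surround)
    return out


def normalize(m: Grid) -> Grid:
    """Rescale a map to [0, 1] so channels with different ranges can be combined."""
    lo = min(min(row) for row in m)
    hi = max(max(row) for row in m)
    span = hi - lo
    return [[(v - lo) / span if span else 0.0 for v in row] for row in m]


def saliency(features: List[Grid]) -> Grid:
    """Average the normalized conspicuity maps into one master saliency map."""
    maps = [normalize(center_surround(f)) for f in features]
    h, w = len(maps[0]), len(maps[0][0])
    return [[sum(m[y][x] for m in maps) / len(maps) for x in range(w)]
            for y in range(h)]


if __name__ == "__main__":
    # Hypothetical 5x5 scene: flat intensity with one bright "target" cell,
    # plus a flat color channel that contributes no contrast.
    intensity = [[0.2] * 5 for _ in range(5)]
    intensity[2][3] = 1.0
    color = [[0.5] * 5 for _ in range(5)]
    smap = saliency([intensity, color])
    best = max(((y, x) for y in range(5) for x in range(5)),
               key=lambda p: smap[p[0]][p[1]])
    print("most salient cell:", best)
```

Averaging treats every channel equally; real models typically weight or nonlinearly normalize channels so that a map with one strong peak outweighs a map with many weak ones.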

Visual feature examples

Video analysis detects additional visual features, such as flicker and the direction and velocity of motion between frames.
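To make the video features concrete, here is a minimal sketch of how flicker and motion between two frames might be estimated. It assumes frames are small grayscale grids, treats flicker as the mean absolute intensity change, and finds motion with exhaustive block matching (minimum sum of absolute differences), a common textbook technique. All names and parameters (`block_motion`, `search`, `fps`) are hypothetical; Feng-GUI's actual video pipeline is not described in the source.

```python
"""Toy estimates of flicker and motion between two video frames.

Assumptions: grayscale frames as lists of lists; flicker = mean absolute
intensity change; motion via exhaustive block matching. Not Feng-GUI's code.
"""
import math
from typing import List, Tuple

Frame = List[List[float]]


def flicker(prev: Frame, curr: Frame) -> float:
    """Mean absolute per-pixel intensity change between consecutive frames."""
    diffs = [abs(a - b) for ra, rb in zip(prev, curr) for a, b in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def block_motion(prev: Frame, curr: Frame, top: int, left: int,
                 size: int, search: int) -> Tuple[int, int]:
    """Return the (dy, dx) shift of a size x size block of prev that best matches curr."""
    h, w = len(curr), len(curr[0])
    best, best_cost = (0, 0), math.inf
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            y0, x0 = top + dy, left + dx
            if y0 < 0 or x0 < 0 or y0 + size > h or x0 + size > w:
                continue  # candidate block falls outside the frame
            # Sum of absolute differences between the block and the candidate.
            cost = sum(abs(prev[top + i][left + j] - curr[y0 + i][x0 + j])
                       for i in range(size) for j in range(size))
            if cost < best_cost:
                best, best_cost = (dy, dx), cost
    return best


def direction_and_speed(shift: Tuple[int, int], fps: float) -> Tuple[float, float]:
    """Convert a per-frame pixel shift into direction (degrees) and speed (pixels/s)."""
    dy, dx = shift
    # Image y grows downward; negate dy so that 90 degrees means upward motion.
    angle = math.degrees(math.atan2(-dy, dx))
    speed = math.hypot(dx, dy) * fps
    return angle, speed


if __name__ == "__main__":
    # Hypothetical 8x8 frames: a 2x2 bright square moves 2 pixels to the right.
    prev = [[0.0] * 8 for _ in range(8)]
    curr = [[0.0] * 8 for _ in range(8)]
    for i in (2, 3):
        for j in (2, 3):
            prev[i][j] = 1.0
        for j in (4, 5):
            curr[i][j] = 1.0
    shift = block_motion(prev, curr, top=2, left=2, size=2, search=3)
    print("flicker:", flicker(prev, curr))
    print("shift:", shift, "direction/speed:", direction_and_speed(shift, fps=25.0))
```

Exhaustive search is simple but costs O(search²) block comparisons per block; production systems usually use optical flow or hierarchical search instead.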

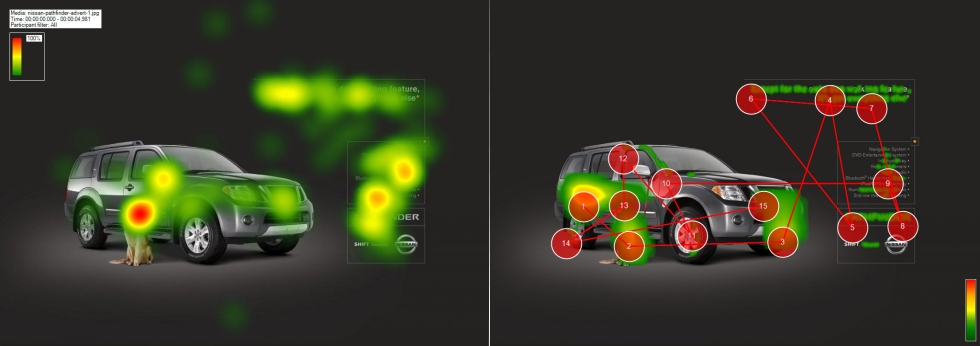

Eye Tracking Comparison

More eye-tracking comparison examples are available in the Reports Gallery: https://feng-gui.com/gallery

Test and Tune

We continuously measure, benchmark, and refine our analysis using public eye-tracking datasets containing thousands of images viewed by hundreds of participants.

The Massachusetts Institute of Technology (MIT), the world's leading technology university, has validated the accuracy of the Feng-GUI attention algorithm as part of its Saliency Benchmark.
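Saliency benchmarks such as MIT's compare a model's predicted map against recorded human fixations using several metrics. Two widely used ones are Normalized Scanpath Saliency (NSS: the mean of the standardized saliency map at fixated pixels) and the correlation coefficient (CC: Pearson correlation between the predicted map and a human fixation-density map). The sketch below follows those textbook definitions under stated assumptions: maps are lists of lists, fixations are `(row, col)` pixels, and the population standard deviation is used. The official benchmark code may differ in such details, and the example values are hypothetical.

```python
"""Textbook versions of two saliency-benchmark metrics: NSS and CC.

Assumptions: maps are lists of lists of floats, fixations are (row, col)
pixel coordinates, and the population standard deviation is used.
"""
import math
from typing import List, Sequence, Tuple

Grid = List[List[float]]


def _standardize(m: Grid) -> List[float]:
    """Flatten a map and rescale it to zero mean and unit (population) std."""
    flat = [v for row in m for v in row]
    mean = sum(flat) / len(flat)
    std = math.sqrt(sum((v - mean) ** 2 for v in flat) / len(flat))
    if std == 0:
        raise ValueError("map is constant; NSS and CC are undefined")
    return [(v - mean) / std for v in flat]


def nss(saliency: Grid, fixations: Sequence[Tuple[int, int]]) -> float:
    """Normalized Scanpath Saliency: mean standardized saliency at fixated pixels."""
    width = len(saliency[0])
    z = _standardize(saliency)
    return sum(z[r * width + c] for r, c in fixations) / len(fixations)


def cc(saliency: Grid, fixation_density: Grid) -> float:
    """Pearson correlation between a predicted map and a fixation-density map."""
    a, b = _standardize(saliency), _standardize(fixation_density)
    # With both maps standardized, the mean elementwise product is Pearson's r.
    return sum(x * y for x, y in zip(a, b)) / len(a)


if __name__ == "__main__":
    predicted = [[0.0, 0.0], [0.0, 4.0]]   # hypothetical 2x2 prediction
    print("NSS at the peak:", nss(predicted, [(1, 1)]))
    print("CC with itself:", cc(predicted, predicted))
```

An NSS above 0 means fixations land on above-average saliency; a CC of 1 means the predicted and human maps are perfectly linearly related.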


Feng-GUI stands at the intersection of scientific rigor and real-world performance, ensuring results you can trust.

Here is a partial list of the datasets.

| Dataset | Citation / description |
|---|---|
| **Image datasets** | |
| MIT data set | Tilke Judd, Krista Ehinger, Fredo Durand, Antonio Torralba. Learning to Predict Where Humans Look [ICCV 2009] |
| FIGRIM Fixation Dataset | Zoya Bylinskii, Phillip Isola, Constance Bainbridge, Antonio Torralba, Aude Oliva. Intrinsic and Extrinsic Effects on Image Memorability [Vision Research 2015] |
| MIT Saliency Benchmark | Z. Bylinskii, T. Judd, A. Borji, L. Itti, F. Durand, A. Oliva, A. Torralba. MIT Saliency Benchmark |
| MIT300 | T. Judd, F. Durand, A. Torralba. A Benchmark of Computational Models of Saliency to Predict Human Fixations [MIT tech report 2012] |
| CAT2000 | Ali Borji, Laurent Itti. CAT2000: A Large Scale Fixation Dataset for Boosting Saliency Research [CVPR 2015 workshop on "Future of Datasets"] |
| Coutrot Database 1 | Antoine Coutrot, Nathalie Guyader. How saliency, faces, and sound influence gaze in dynamic social scenes [JoV 2014]; Antoine Coutrot, Nathalie Guyader. Toward the introduction of auditory information in dynamic visual attention models [WIAMIS 2013] |
| SAVAM | Yury Gitman, Mikhail Erofeev, Dmitriy Vatolin, Andrey Bolshakov, Alexey Fedorov. Semiautomatic Visual-Attention Modeling and Its Application to Video Compression [ICIP 2014] |
| Eye Fixations in Crowd (EyeCrowd) data set | Ming Jiang, Juan Xu, Qi Zhao. Saliency in Crowd [ECCV 2014] |
| Fixations in Webpage Images (FiWI) data set | Chengyao Shen, Qi Zhao. Webpage Saliency [ECCV 2014] |
| VIU data set | Kathryn Koehler, Fei Guo, Sheng Zhang, Miguel P. Eckstein. What Do Saliency Models Predict? [JoV 2014] |
| Object and Semantic Images and Eye-tracking (OSIE) data set | Juan Xu, Ming Jiang, Shuo Wang, Mohan Kankanhalli, Qi Zhao. Predicting Human Gaze Beyond Pixels [JoV 2014] |
| VIP data set | Keng-Teck Ma, Terence Sim, Mohan Kankanhalli. A Unifying Framework for Computational Eye-Gaze Research [Workshop on Human Behavior Understanding 2013] |
| MIT Low-resolution data set | Tilke Judd, Fredo Durand, Antonio Torralba. Fixations on Low-Resolution Images [JoV 2011] |
| KTH Kootstra data set | Gert Kootstra, Bart de Boer, Lambert R. B. Schomaker. Predicting Eye Fixations on Complex Visual Stimuli using Local Symmetry [Cognitive Computation 2011] |
| NUSEF data set | Subramanian Ramanathan, Harish Katti, Nicu Sebe, Mohan Kankanhalli, Tat-Seng Chua. An eye fixation database for saliency detection in images [ECCV 2010] |
| TUD Image Quality Database 2 | H. Alers, H. Liu, J. Redi, I. Heynderickx. Studying the risks of optimizing the image quality in saliency regions at the expense of background content [SPIE 2010] |
| Ehinger data set | Krista Ehinger, Barbara Hidalgo-Sotelo, Antonio Torralba, Aude Oliva. Modeling search for people in 900 scenes [Visual Cognition 2009] |
| A Database of Visual Eye Movements (DOVES) | Ian van der Linde, Umesh Rajashekar, Alan C. Bovik, Lawrence K. Cormack. DOVES: A database of visual eye movements [Spatial Vision 2009] |
| TUD Image Quality Database 1 | H. Liu, I. Heynderickx. Studying the Added Value of Visual Attention in Objective Image Quality Metrics Based on Eye Movement Data [ICIP 2009] |
| Visual Attention for Image Quality (VAIQ) Database | Ulrich Engelke, Anthony Maeder, Hans-Jurgen Zepernick. Visual Attention Modeling for Subjective Image Quality Databases [MMSP 2009] |
| Toronto data set | Neil Bruce, John K. Tsotsos. Attention based on information maximization [JoV 2007] |
| Fixations in Faces (FiFA) database | Moran Cerf, Jonathan Harel, Wolfgang Einhauser, Christof Koch. Predicting human gaze using low-level saliency combined with face detection [NIPS 2007] |
| Le Meur data set | Olivier Le Meur, Patrick Le Callet, Dominique Barba, Dominique Thoreau. A coherent computational approach to model the bottom-up visual attention [PAMI 2006] |
| **Complexity datasets** | |
| IC9600 | A large-scale benchmark for automatic image complexity assessment |
| SAVOIAS | A visual complexity dataset of over 1,400 images from seven categories: Scenes, Advertisements, Visualizations, Objects, Interior Design, Art, and Suprematism |
| VISC | The VISC (Visual Complexity) dataset: 800 images with human-rated complexity scores |
| RSIVL | The RSIVL (Rapid Scene Image and Visual Lexicon) dataset: 49 images with subjective complexity ratings |
| **Video datasets** | |
| Hollywood-2 | Actions collected from 69 Hollywood movies: about 487k frames (about 20 hours of video), split into a training set of 823 sequences and a test set of 884 sequences |
| UCF Sports | 150 videos covering 9 sports action classes: diving, golf swinging, kicking, lifting, horseback riding, running, skateboarding, swinging, and walking |
| DIEM | 250 participants watching 85 different videos |
| LEDOV | 538 videos with diverse content, totaling 179,336 frames and 6,431 seconds; 32 participants (18 male, 14 female), aged 20 to 56 (mean 32) |
| CRCNS | 520 human eye-tracking traces recorded while normal young adult volunteers freely watched complex video stimuli (TV programs, outdoor videos, video games); eye movements from eight subjects watching 50 different video clips |
| SFU | 12 video sequences |
| **Aesthetic datasets** | |
| AVA | AVA: A Large-Scale Database for Aesthetic Visual Analysis. Over 250,000 images with rich metadata, including a large number of aesthetic scores for each image |
| TID2013 | An image quality assessment dataset for evaluating full-reference visual quality metrics: 25 reference images and 3,000 distorted images (25 reference images x 24 distortion types x 5 distortion levels) |
| LIVE | Laboratory for Image & Video Engineering (LIVE) Image Quality Assessment Database |
| Datasets with aesthetic annotations | Photo.net (3,581 images); ImageCLEF; CUHK (12,000 images); CUHKPQ (17,613 images) |
| VisAWI | VisAWI manual: Visual Aesthetics of Websites Inventory; VisAWI-S: Short Visual Aesthetics of Websites Inventory (Moshagen and Thielsch 2010). Provides users' evaluation data along the two main aesthetic dimensions in HCI design: clarity and expressiveness. Feedback: clarity, likeability, informativeness, credibility. 1,280 websites, 764 people aged 17 to 79; 61.8% female, 37.7% male; 66.0% with university education |
| Reinecke | Katharina Reinecke, Krzysztof Z. Gajos. Quantifying Visual Preferences Around the World. 430 websites, 40,000 people, 2.4 million ratings |
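The complexity and aesthetic datasets listed above pair images with human ratings. One common way to check a model's scores against such ratings (an assumption here, not a description of Feng-GUI's own tuning process) is Spearman's rank correlation, which only asks whether the model orders images the same way people do. The minimal sketch below computes it with average ranks for ties; the function names and example scores are hypothetical.

```python
"""Spearman rank correlation between model scores and human ratings.

Assumption: an illustrative evaluation step, not Feng-GUI's pipeline.
Ties receive the average of the ranks they span.
"""
import math
from typing import List, Sequence


def ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks, giving tied values the average of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over every value tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result


def spearman(predicted: Sequence[float], human: Sequence[float]) -> float:
    """Pearson correlation computed on the ranks of both score lists."""
    rp, rh = ranks(predicted), ranks(human)
    n = len(rp)
    mp, mh = sum(rp) / n, sum(rh) / n
    cov = sum((a - mp) * (b - mh) for a, b in zip(rp, rh))
    sp = math.sqrt(sum((a - mp) ** 2 for a in rp))
    sh = math.sqrt(sum((b - mh) ** 2 for b in rh))
    return cov / (sp * sh)


if __name__ == "__main__":
    model_scores = [0.12, 0.40, 0.35, 0.90]   # hypothetical complexity predictions
    human_ratings = [1.5, 3.0, 3.5, 4.8]      # hypothetical mean human ratings
    print("Spearman rho:", spearman(model_scores, human_ratings))
```

Because it works on ranks, Spearman's rho is insensitive to the different rating scales used across datasets, which makes it convenient for comparing against several of them.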